AI has moved from experimental pilots to business-critical infrastructure. But as organizations scale adoption, one uncomfortable truth is emerging: you can’t protect what you can’t see.

Without visibility into how models, agents, and data behave in real time, enterprises face blind spots that can turn innovation into costly incidents, damage the company’s reputation, and erode trust. According to Accenture, “Gen AI exposes organizations to a broader threat landscape, more sophisticated attackers and also new points of attack.”

The Problem With Black-Box AI

Traditional monitoring tools weren’t built for increasingly complex, fast-evolving AI ecosystems.

Models are retrained, prompts evolve, and agents interact autonomously, often faster than governance teams can keep up. Without observability, performance issues, hallucinations, and, most importantly, security breaches remain hidden until they cause real damage, which is both disruptive and costly.

Imagine a financial chatbot that begins offering misleading investment advice, or a customer service agent that unknowingly leaks private data. Without lineage and runtime visibility, root-causing these issues could take weeks, while customer trust erodes overnight.

What Breaks Without Observability

The lack of visibility translates directly into six critical business risks:

- Silent Model Drift: Models quietly lose accuracy and relevance as data changes daily, leading to flawed predictions and biased outcomes. Drift can also mask early indicators of data manipulation or poisoning.

- Hidden Data Exfiltration: Prompt injection and malicious tool use can lead to the unintentional exposure of confidential data, API keys, or proprietary model logic. Without runtime logging and anomaly detection, these leaks go undetected until significant damage has occurred.

- Hallucinations in Production: LLMs are powerful and occasionally overconfident. Without observability, detecting when responses deviate from factual or policy-aligned norms is difficult, especially in regulated sectors.

- Shadow AI and Policy Gaps: Teams experimenting with unvetted tools and external models often bypass enterprise controls. These “shadow AI” deployments increase the risk of unauthorized data sharing and compliance violations.

- Audit and Compliance Risk: Regulations and frameworks like the EU AI Act, the National Institute of Standards and Technology (NIST) AI Risk Management Framework (AI RMF), and ISO 42001 emphasize traceability and explainability. Missing lineage or incomplete telemetry can lead to failed audits and regulatory fines.

- Cost and Performance Waste: Unoptimized prompts, runaway agents, and oversized models inflate computing costs. Spiking compute patterns can also signal exploitation attempts or denial-of-service attacks.
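To make the silent drift risk more concrete, here is a minimal sketch of one common way to compare a baseline sample against recent production data: the Population Stability Index (PSI). The function name, the bin count, and the rule-of-thumb thresholds in the comments are assumptions for illustration, not something prescribed by the frameworks named above.

```python
import math

def population_stability_index(baseline, current, bins=10):
    """PSI between two samples of a numeric feature or model score."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # A small floor keeps log() defined when a bucket is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    b = bucket_shares(baseline)
    c = bucket_shares(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))

# Hypothetical data: training-time scores vs. scores shifted toward the high end.
baseline_scores = [i / 100 for i in range(100)]
recent_scores = [0.5 + i / 200 for i in range(100)]

psi = population_stability_index(baseline_scores, recent_scores)
# A widely used rule of thumb (an assumption here, tune per use case):
# below 0.1 is stable, 0.1 to 0.25 deserves a look, above 0.25 suggests real drift.
drift_suspected = psi > 0.25
```

A check like this is cheap enough to run on a schedule per feature or per model output, which is what turns drift from a silent failure into an alert.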

The Scale of the Problem

Without observability, enterprises face compounding risks:

- 5–10% performance decay in weeks due to drift: Model performance can degrade quickly as data distributions shift. Peer-reviewed work and industry tutorials document measurable accuracy decay within weeks if drift isn’t monitored. Directionally, single-digit to low double-digit drops are common depending on domain and seasonality. (Source: National Library of Medicine)

- Incident detection delays stretching from minutes to weeks: In security programs generally, IBM/Ponemon’s benchmark shows breach lifecycles are still measured in hundreds of days without strong automation. Organizations with fully deployed security AI/automation cut average identification/containment to approximately 247 days vs. 324 days without it, evidence that lack of telemetry and automation materially slows detection and response. AI-driven observability/AIOps studies similarly report material Mean Time to Resolve (MTTR) improvements when rich telemetry is present. (Source: Cost of a Data Breach Report 2025)

- Remediation times doubling when there’s no lineage: When teams lack lineage and end-to-end traceability, root-cause analysis and remediation take significantly longer. Multiple analyses highlight that missing lineage prolongs investigations and increases failure costs compared with environments that capture lineage and runtime context. (The benchmarks above on lifecycle/MTTR reductions with better telemetry reinforce this point.) (Source: DataScience Show, “Why Ignoring Data Lineage Could Derail Your AI Projects”)

- 15–30% operational waste from unmonitored token and model usage: FinOps and cost-management research shows AI/cloud spending frequently overruns due to poor visibility into usage metrics (e.g., tokens, GPU hours). 2025 studies report widespread forecasting misses and overspend; cloud/AI costs rise sharply without cost observability and quotas, implying double-digit waste bands are common until controls mature. (Source: DataScience Show, “Why Ignoring Data Lineage Could Derail Your AI Projects”)

The takeaway: Lack of insight amplifies risk across accuracy, resilience, and economics.

What “Good” AI Observability Looks Like

Leading organizations are moving beyond static dashboards to continuous, end-to-end visibility across every layer of the AI ecosystem. True observability doesn’t just monitor; it detects, correlates, and protects in real time.

- Lineage and Inventory Tracking: Maintaining a unified inventory of all models, datasets, and agents establishes the foundation for governance and defense. Lineage visibility shows data origins, transformations, and dependencies, enabling rapid forensics and precise impact analysis during security or compliance incidents.

- Runtime Capture of Prompts and Interactions: Securely logging prompts, responses, and tool activity helps detect misuse such as prompt injection, unauthorized access, or data exfiltration. Redaction safeguards privacy while maintaining full traceability for investigation and accountability.

- Always-On Evaluations for Bias, Safety, and Accuracy: Continuous assessment ensures outputs remain factual and policy-aligned. These checks not only prevent bias and misinformation but also provide audit-ready evidence of responsible AI behavior.

- Drift and Anomaly Detection: Monitoring data and output patterns reveals deviations from normal behavior. Early detection of drift or anomalies flags potential data poisoning, adversarial input, or unauthorized system use before damage occurs.

- Threat Telemetry and Attack Detection: Integrating telemetry to track injection attempts, agent loops, or abnormal API activity transforms observability from passive monitoring into active defense, providing real-time protection against evolving AI threats.

- Governance Dashboards Linked to Policy Controls: Regulations like the EU AI Act and National Institute of Standards and Technology (NIST) AI Risk Management Framework (RMF) demand explainability and traceability. Governance dashboards connect model usage, access, and outcomes to compliance requirements, simplifying audits and reinforcing trust with regulators and stakeholders.

Together, these capabilities create a living map of your AI ecosystem—a real-time, adaptive view that strengthens trust, speeds response, and enables secure, scalable innovation.
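As a rough illustration of the impact analysis that lineage makes possible, the sketch below walks a small dependency graph to find everything downstream of a compromised dataset. The asset names and the `LINEAGE` structure are hypothetical; a real inventory would come from a metadata catalog rather than a hard-coded dictionary.

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to the assets built from it.
LINEAGE = {
    "crm_export_2024": ["customer_features"],
    "customer_features": ["churn_model_v3", "support_rag_index"],
    "churn_model_v3": ["retention_agent"],
    "support_rag_index": ["support_chatbot"],
}

def downstream_assets(graph, source):
    """Return every asset reachable from `source`, in breadth-first order."""
    seen, order = {source}, []
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order

# If the feature table is found to be poisoned, these assets need review:
affected = downstream_assets(LINEAGE, "customer_features")
# ['churn_model_v3', 'support_rag_index', 'retention_agent', 'support_chatbot']
```

With lineage captured up front, this question takes one query during an incident instead of a manual hunt across teams.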

Metrics That Matter

AI is no longer a black box, but without observability, it might as well be. As organizations scale AI across mission-critical workflows, visibility into model behavior, data lineage, and runtime security becomes a prerequisite for trust. Metrics transform abstract observability into actionable intelligence, quantifying how secure and compliant AI is.

Every model interaction, prompt, and output generates signals. Without measuring those signals, security risks like data leakage, model drift, or prompt attacks remain invisible until it’s too late. By defining and tracking key observability metrics, enterprises can move from reactive firefighting to proactive assurance, where governance, security, and performance are continuously validated.
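One way to picture those signals is as a structured log record written for every interaction, with sensitive values redacted before anything is stored. The following is a minimal sketch under stated assumptions: the two regex patterns, the `sk-` key prefix, and the record fields are illustrative only, and production redaction would need far broader, tested detectors.

```python
import json
import re

# Illustrative patterns only (assumptions, not a complete detector set).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
}

def redact(text):
    """Replace matches with a typed placeholder and count what was removed."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts

def log_record(model, prompt, response):
    """Build a JSON log line that stays traceable without storing raw secrets."""
    safe_prompt, prompt_hits = redact(prompt)
    safe_response, response_hits = redact(response)
    return json.dumps({
        "model": model,
        "prompt": safe_prompt,
        "response": safe_response,
        # Redaction counts double as a leakage signal for monitoring.
        "redactions": {"prompt": prompt_hits, "response": response_hits},
    })

line = log_record(
    "demo-model",  # hypothetical model name
    "Email jane.doe@example.com, key sk-abcdef1234567890XYZ",
    "Done.",
)
```

Counting redactions per record, rather than only masking them, gives a number that can be aggregated and alerted on.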

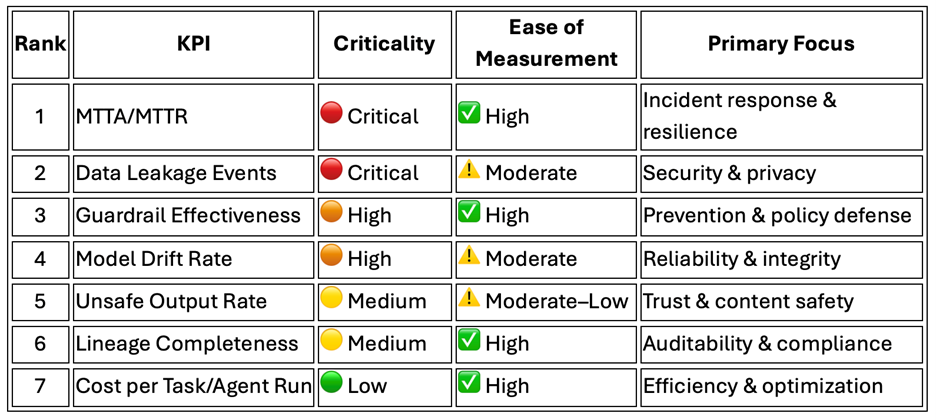

To manage what you can’t see, you need clear indicators of AI health and security. These metrics translate into measurable performance and trust.

- Mean Time to Detect/Resolve (MTTD / MTTR) – Critical (Easy to track from incident logs.) Measures how quickly issues are found and fixed. Low MTTD and MTTR signal strong visibility and fast response, essential for containing model drift, data leaks, or prompt attacks before damage spreads.

- Data Leakage Events (per 10k requests) – Critical (Requires runtime monitoring and redaction analysis.) Tracks how often sensitive data escapes through outputs or APIs. Each event signals potential compliance or reputational exposure, making this one of the most important observability metrics.

- Guardrail Effectiveness (% blocked vs. escaped attempts) – High (Straightforward to track via model logs and policy engines.) Shows how well controls stop unsafe or malicious activity. High effectiveness reflects resilient defenses against prompt injections, jailbreaks, and policy breaches.

- Model Drift Rate – High (Moderately difficult; requires periodic validation and baseline testing.) Captures how fast model accuracy deteriorates as data or context changes. Persistent drift can indicate data poisoning or bias.

- Unsafe or Hallucinated Output Rate – Medium (Harder to quantify; may require human review or automated labeling.) Measures how often outputs deviate from factual or policy-aligned standards. High rates weaken user trust and expose governance gaps.

- Lineage Completeness – Medium (Easier to automate through metadata tracking.) Assesses how well every model, dataset, and change is documented and auditable. Full lineage supports compliance (EU AI Act, NIST AI RMF) and fast incident forensics.

- Cost per Successful Task or Agent Run – Low (Easily measured through FinOps and usage analytics.) Monitors operational efficiency and helps detect anomalies or runaway compute costs. Useful for financial control but secondary to safety metrics.

These KPIs turn observability into accountability, aligning technical telemetry with business impact. They also create better audit traceability for faster response time and remediation.
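Several of these KPIs reduce to simple arithmetic once the underlying events are logged. The sketch below shows one possible way to compute three of them; the incident field names, the sample numbers, and the choice to measure time to resolve from the moment of detection are assumptions for illustration.

```python
from datetime import datetime

def leakage_per_10k(leak_events, total_requests):
    """Data leakage events normalized per 10,000 requests."""
    return 10_000 * leak_events / total_requests if total_requests else 0.0

def guardrail_effectiveness(blocked, escaped):
    """Percentage of unsafe attempts that were blocked."""
    attempts = blocked + escaped
    # With no attempts observed, nothing escaped; report 100% by convention.
    return 100.0 * blocked / attempts if attempts else 100.0

def mean_hours(incidents, start_key, end_key):
    """Average elapsed hours between two timestamps across incidents."""
    deltas = [(i[end_key] - i[start_key]).total_seconds() / 3600 for i in incidents]
    return sum(deltas) / len(deltas) if deltas else 0.0

# Hypothetical incident log entries.
incidents = [
    {"started": datetime(2025, 3, 1, 8), "detected": datetime(2025, 3, 1, 10),
     "resolved": datetime(2025, 3, 1, 18)},
    {"started": datetime(2025, 3, 5, 9), "detected": datetime(2025, 3, 5, 13),
     "resolved": datetime(2025, 3, 6, 1)},
]

time_to_detect = mean_hours(incidents, "started", "detected")    # 3.0 hours
time_to_resolve = mean_hours(incidents, "detected", "resolved")  # 10.0 hours
leak_rate = leakage_per_10k(3, 120_000)                          # 0.25 per 10k
blocked_pct = guardrail_effectiveness(980, 20)                   # 98.0
```

Normalizing leakage per 10k requests, rather than reporting raw counts, keeps the metric comparable as traffic grows.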

Why It Matters Now

In the era of generative and autonomous AI, observability has become the foundation of digital trust. It turns security from a reactive defense into proactive awareness, detecting, explaining, and adapting in real time. The need is clear: according to research from IBM, 97% of organizations that reported an AI-related security incident lacked proper AI access controls.

As AI becomes embedded in every workflow, enterprises need more than tools; they need a unified platform that provides end-to-end visibility across data, models, and agents. Fragmented monitoring can’t keep pace with dynamic model behavior, real-time user interactions, or regulatory expectations. A cohesive observability layer connects these signals into one view, enabling faster response and more reliable governance.

Platforms like Grafyn AI’s are designed to meet that need, combining runtime monitoring, lineage tracking, and security analytics into an integrated fabric. This ensures organizations can scale AI confidently while maintaining compliance, resilience, and control.

Without such observability, AI remains a black box. With it, businesses gain the clarity to innovate securely, earn trust, and adapt to the accelerating landscape of intelligent automation.

Research Sources & Industry Insights

- Accenture (2025). Understand new threats, prepare and respond quickly to attacks

- Accenture (2024). Redefining resilience: Cybersecurity in the generative AI era

- McKinsey (2025). Seizing the agentic AI advantage

- Deloitte (2025). Cybersecurity meets AI and GenAI

- Gartner (2025). Top 10 Strategic Technology Trends for 2026

At Grafyn, We Believe Seeing Is Securing.

Our AI Security Fabric gives enterprises the visibility and control they lack today, unifying observability, governance, and real-time threat protection into a single platform. By turning blind spots into actionable insight, Grafyn AI helps organizations detect risks earlier, meet compliance with confidence, and scale AI responsibly without sacrificing speed or trust. The result: safer innovation and faster growth.
